Quantum computing: definition

Quantum computing can be defined as a technology aimed at complex problems that traditional computers, and even supercomputers, either cannot solve or cannot solve quickly enough.

This branch of computing is based on the principles of superposition and entanglement, as we shall see later, which allow certain kinds of problems to be solved much more efficiently.

This new generation of computers takes advantage of quantum mechanics to overcome the limitations of classical computing.

It should be noted, however, that quantum computing will not completely replace current computing. Quantum computers will still need traditional computers to function and produce results.

Instead, quantum computing will complement classical computing in those cases where it really provides a substantial advantage, a concept known as quantum advantage.

What is a quantum bit?

To define what a quantum bit is, let’s go to the origin of the word itself: the term qubit is a contraction of the English words quantum and bit. A qubit is the basic unit of information in a quantum computer.

With enough qubits, a quantum computer can process enormous amounts of information in parallel, something almost impossible for a classical computer.

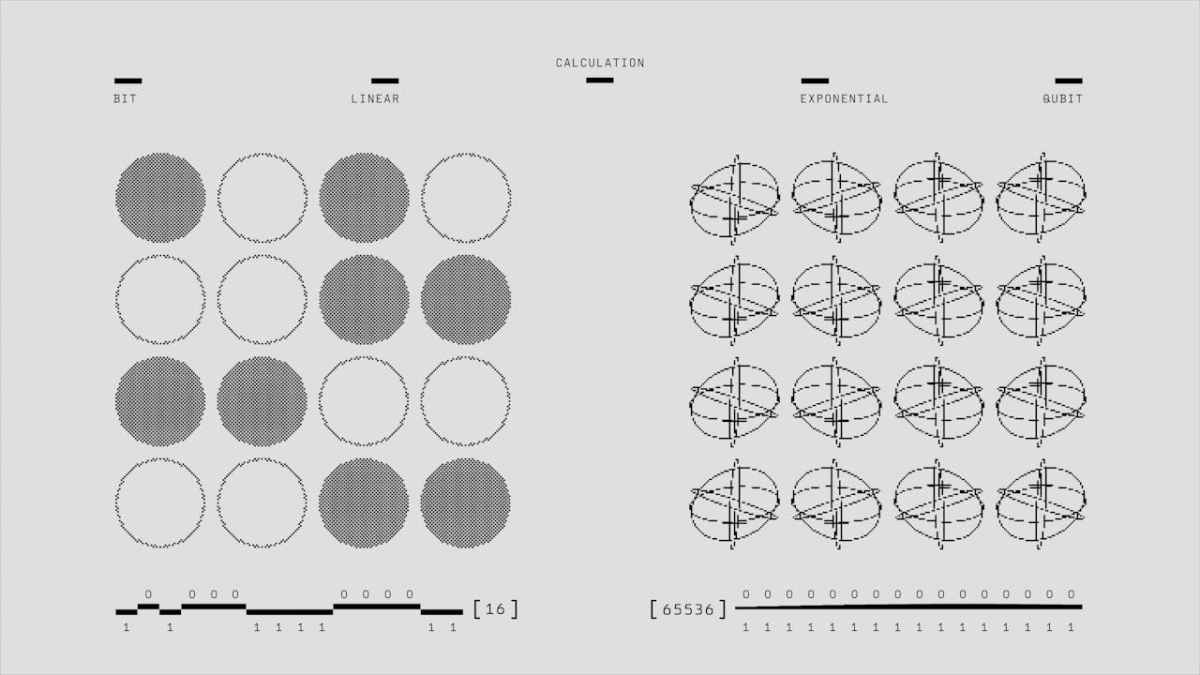

To be concrete, imagine a set of three bits in a classical computer, which can represent any one of eight values at a given time. Three qubits, however, can be in a superposition of all eight combinations at once, and the number of combinations doubles with every qubit added, so it grows exponentially.
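The three-bit comparison above can be sketched in a few lines of plain Python. This is only an illustration, not how a real quantum computer is programmed: the names `classical_values`, `state` and `amplitudes_needed` are made up for this example, and the state chosen is the uniform superposition, where every combination is equally likely.

```python
import math
from itertools import product

N_QUBITS = 3

# A classical 3-bit register holds exactly one of these 8 values at a time.
classical_values = ["".join(bits) for bits in product("01", repeat=N_QUBITS)]

# A 3-qubit state is described by one amplitude per combination (8 in total).
# Assumption for this sketch: the uniform superposition, each amplitude 1/sqrt(8).
amplitude = 1 / math.sqrt(2 ** N_QUBITS)
state = {label: amplitude for label in classical_values}

# The probability of measuring each combination is the squared amplitude.
probabilities = {label: abs(a) ** 2 for label, a in state.items()}


def amplitudes_needed(n_qubits: int) -> int:
    """Number of amplitudes that describe a general n-qubit state."""
    return 2 ** n_qubits


if __name__ == "__main__":
    print(classical_values)
    print(probabilities)
    print(amplitudes_needed(10), amplitudes_needed(20))
```

Note that measuring the three qubits still returns only one of the eight combinations. The exponential growth is in the number of amplitudes the state carries, which is what quantum algorithms try to exploit.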

This is the foundation of quantum power, the ability to process information in parallel thanks to superposition.

Difference between a bit and a qubit

But how do these quantum bits differ from traditional bits? Qubits can be in multiple states at the same time, as if they were 0 and 1 simultaneously, a property known as superposition.

Explained more technically, a bit is an electronic signal that can be either on or off, so the value of the bit can be either zero (off) or one (on). However, since the qubit is based on the laws of quantum mechanics, it can be placed in a superposition of states.
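To make the difference tangible, here is a minimal sketch of a single qubit in plain Python. It assumes the usual textbook description, in which a qubit is a pair of amplitudes and the Hadamard gate turns the "off" state into an equal superposition. The function names `hadamard` and `measure` are chosen for this example and do not come from any particular library.

```python
import math
import random

# A qubit state as two amplitudes: (amplitude of 0, amplitude of 1).
ZERO = (1.0, 0.0)  # behaves like a classical bit that is "off"


def hadamard(state):
    """Apply the Hadamard gate, which maps the 0 state to an equal superposition."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))


def measure(state, rng):
    """Return 0 or 1 with probabilities |alpha|^2 and |beta|^2."""
    alpha, _ = state
    return 0 if rng.random() < abs(alpha) ** 2 else 1


plus = hadamard(ZERO)    # superposition of 0 and 1
rng = random.Random(0)   # fixed seed so the run is repeatable
counts = [0, 0]
for _ in range(1000):
    counts[measure(plus, rng)] += 1

if __name__ == "__main__":
    print("amplitudes:", plus)
    print("measured 0 / 1:", counts)
```

Each individual measurement gives a plain 0 or 1, like a classical bit. The superposition only shows up in the statistics: roughly half of the results are 0 and half are 1.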

Superposition, entanglement and decoherence

We have mentioned the term superposition, but what is it in this context? Together with entanglement and decoherence, it is one of the key principles of quantum computing.

Let’s look at the main features of each of them.

Superposition

This concept is fundamental in quantum physics and, as we mentioned earlier, in quantum computing it is the essential feature of qubits.

Superposition is what makes it possible for quantum computers to perform massively parallel computations, because each quantum bit can represent multiple states simultaneously.

Entanglement

This concept challenges the traditional understanding of reality by implying a deep correlation between two or more quantum particles, which means that the state of one particle is intrinsically linked to the state of the others, no matter how far apart they are.
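The correlation described above can be illustrated with the simplest entangled state of two qubits, usually called a Bell state. The sketch below is plain Python and only samples measurement results. The names `bell` and `sample` are invented for this example, and it checks the correlation in a single measurement basis, which by itself is not a full proof of entanglement.

```python
import math
import random

# Bell state (|00> + |11>) / sqrt(2): one amplitude per two-qubit combination.
s = 1 / math.sqrt(2)
bell = {"00": s, "01": 0.0, "10": 0.0, "11": s}


def sample(state, rng):
    """Measure both qubits once; each outcome has probability |amplitude|^2."""
    labels = list(state)
    weights = [abs(state[label]) ** 2 for label in labels]
    return rng.choices(labels, weights=weights, k=1)[0]


rng = random.Random(1)  # fixed seed so the run is repeatable
outcomes = [sample(bell, rng) for _ in range(200)]

# In every run the two qubits agree: both 0 or both 1.
always_equal = all(outcome[0] == outcome[1] for outcome in outcomes)

if __name__ == "__main__":
    print(outcomes[:10])
    print("qubits always agree:", always_equal)
```

Neither qubit has a definite value before the measurement, yet the two results always match, regardless of the distance between the qubits.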

Decoherence

The third principle is known as quantum decoherence, the process by which a quantum system that starts in a superposition state loses its quantum coherence and begins to behave like a classical system.

This decoherence arises from interactions with surrounding particles such as photons, molecules or atoms.
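A rough way to picture this loss of coherence is a simplified "pure dephasing" model, sketched below in plain Python. It is an assumption made for illustration, not a description of any specific hardware: the qubit is written as a 2x2 density matrix, and the off-diagonal terms, which encode the superposition, shrink exponentially with a hypothetical coherence time `t2`.

```python
import math


def dephase(rho, t, t2):
    """Pure dephasing: off-diagonal terms decay by exp(-t / t2).

    The diagonal terms (probabilities of 0 and 1) are left unchanged.
    """
    decay = math.exp(-t / t2)
    return [
        [rho[0][0], rho[0][1] * decay],
        [rho[1][0] * decay, rho[1][1]],
    ]


def purity(rho):
    """Trace of rho squared: 1.0 for a pure state, 0.5 for a fully mixed qubit."""
    return sum(rho[i][j] * rho[j][i] for i in range(2) for j in range(2))


# Equal superposition of 0 and 1, written as a density matrix.
rho_plus = [[0.5, 0.5], [0.5, 0.5]]

if __name__ == "__main__":
    for t in (0.0, 0.5, 1.0, 5.0):
        rho_t = dephase(rho_plus, t, t2=1.0)  # t2 = 1.0 is an arbitrary choice
        print(t, round(rho_t[0][1], 4), round(purity(rho_t), 4))
```

As time passes the off-diagonal terms approach zero and the purity falls from 1 toward 0.5. What remains is an ordinary 50/50 mixture of 0 and 1, which behaves like a classical random bit.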